Atyeti designed a custom architecture that combines AWS services with transformer models and custom clustering to automate and scale the reconciliation process.

Financial Reconciliation Automation Using AWS Outposts and On-Premises Infrastructure

The Need

A leading investment bank sought to overhaul its labor-intensive financial reconciliation pipeline. Out of more than 40,000 unique reconciliations (recons), the existing system supported only 200 because of its heavy maintenance overhead. That rules-based system, which serviced just 0.25% of recons, required four weeks to onboard a single new pipeline and could not scale to address the 100 million manual touchpoints (MTPs) incurred each year. The client needed an automated, scalable, and efficient solution to shorten onboarding, increase coverage, and eliminate the cost and time burden of MTPs.

The Approach

- Amazon EC2 for rapid, on-demand compute, enabling scalable parallelization of training (onboarding) and inference tasks.

- AWS Outposts and Amazon S3 to integrate on-premises data with cloud scalability.

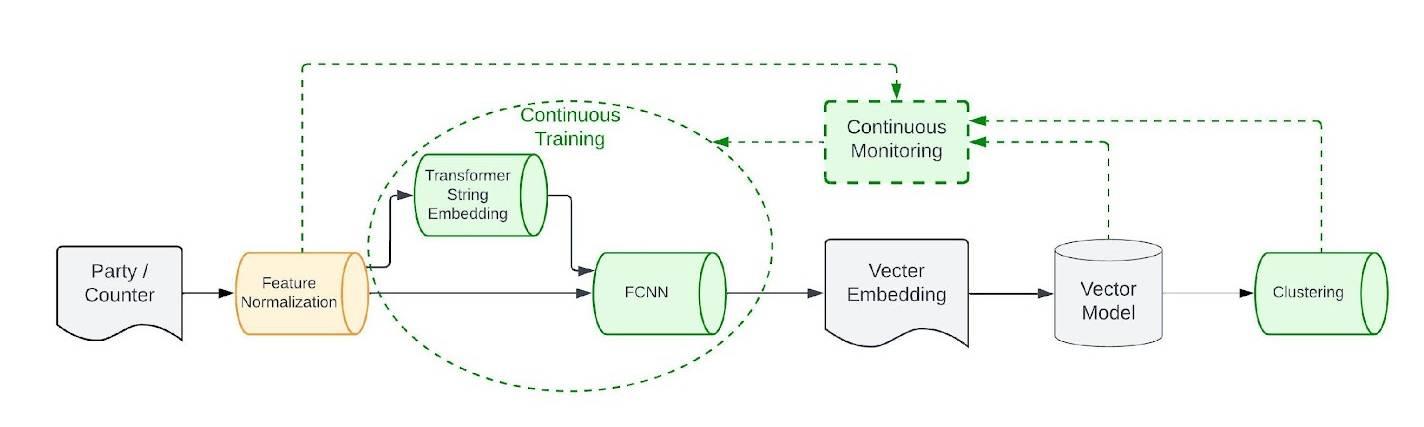
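The EC2 bullet above relies on onboarding and inference work being spread across on-demand compute. Assuming each reconciliation pipeline can be onboarded independently of the others, that work parallelizes naturally. The sketch below shows the fan-out pattern only: `onboard_pipeline` and `onboard_all` are hypothetical names, the onboarding step is a placeholder rather than real model training, and Python's `ThreadPoolExecutor` stands in for a fleet of EC2 workers (for CPU-bound training, separate processes or instances would be the realistic choice).

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class OnboardingResult:
    pipeline_id: str
    n_records: int
    status: str


def onboard_pipeline(pipeline_id: str, records: list[dict]) -> OnboardingResult:
    """Hypothetical per-pipeline onboarding step.

    Placeholder only: a real step would train or align models on this
    pipeline's historical records. Here it just reports what it received.
    """
    status = "ok" if records else "empty"
    return OnboardingResult(pipeline_id, len(records), status)


def onboard_all(
    pipelines: dict[str, list[dict]], max_workers: int = 8
) -> list[OnboardingResult]:
    """Onboard independent pipelines concurrently; results keep input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [
            pool.submit(onboard_pipeline, pid, recs) for pid, recs in pipelines.items()
        ]
        # Collecting in submission order keeps results aligned with the input.
        return [f.result() for f in futures]
```

For example, `onboard_all({"recon-a": [{"amount": 10.0}], "recon-b": []})` returns one result per pipeline, in input order, with statuses `"ok"` and `"empty"`. Because no pipeline depends on another, adding workers scales throughput without any coordination logic.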

- A six-transformer architecture aligned through contextual training to extract complex indicator patterns from multiple unstructured fields.

- A custom clustering algorithm combining vector embeddings, a modified shared-nearest-neighbor (SNN) variant of DBSCAN, and graph analysis to perform high-volume matching with low computational complexity.

- Close collaboration with reconciliation SMEs to encode guardrails and logical isolations, ensuring high accuracy and relevance.
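The clustering bullet above combines embeddings, an SNN variant of DBSCAN, and graph analysis. The team's modifications are not described, so the following is only a sketch of the textbook shared-nearest-neighbor approach it builds on: each record is an embedding vector, two records are SNN neighbors when they appear in each other's `k` nearest neighbors and share at least `eps` of them, and clusters are the connected components of the graph of core points. All function names are hypothetical, and the brute-force k-NN search is O(n²), so a high-volume version would need something like an approximate nearest-neighbor index instead.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def knn_sets(vectors, k):
    """Brute-force k-nearest neighbors (by cosine similarity) for every vector: O(n^2)."""
    sets = []
    for i, v in enumerate(vectors):
        sims = sorted(
            ((cosine(v, w), j) for j, w in enumerate(vectors) if j != i),
            reverse=True,
        )
        sets.append({j for _, j in sims[:k]})
    return sets


def snn_dbscan(vectors, k=4, eps=2, min_pts=2):
    """Textbook SNN-DBSCAN. Returns one cluster label per vector; -1 marks noise.

    eps is a count: the minimum number of shared nearest neighbors two points
    need to be neighbors in SNN space. min_pts is the number of such
    neighbors a point needs to be a core point.
    """
    n = len(vectors)
    knn = knn_sets(vectors, k)

    def snn(i, j):
        # Shared-neighbor similarity, defined only for mutual k-NN pairs.
        if j in knn[i] and i in knn[j]:
            return len(knn[i] & knn[j])
        return 0

    neighbors = [[j for j in knn[i] if snn(i, j) >= eps] for i in range(n)]
    core = [len(neighbors[i]) >= min_pts for i in range(n)]

    # Graph analysis: connected components of the core-to-core SNN graph (union-find).
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for i in range(n):
        if core[i]:
            for j in neighbors[i]:
                if core[j]:
                    parent[find(i)] = find(j)

    labels = [-1] * n
    component_ids = {}
    for i in range(n):
        if core[i]:
            labels[i] = component_ids.setdefault(find(i), len(component_ids))

    # Border points join the cluster of their most similar core neighbor; the rest stay noise.
    for i in range(n):
        if not core[i]:
            core_nbrs = [j for j in neighbors[i] if core[j]]
            if core_nbrs:
                labels[i] = labels[max(core_nbrs, key=lambda j: snn(i, j))]
    return labels
```

On two tight groups of toy 2-D vectors plus one point lying between them, `snn_dbscan(..., k=3)` places each group in its own cluster and labels the in-between point as noise (-1). Defining similarity by shared neighbors rather than raw distance is what lets DBSCAN-style density clustering cope with high-dimensional embeddings, where raw distances tend to become less discriminative.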

The Process

The team aligned the transformers' contextual understanding with domain-specific requirements so that their text-based indicators reliably signal matches. Custom clustering techniques made bulk matching over very large record sets scalable. The team also designed automated retraining mechanisms so that the system continues to learn and adapt over time.
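The case study does not say how retraining is triggered. One common pattern, sketched here with a hypothetical `RetrainMonitor` class and illustrative thresholds, is to track each pipeline's auto-match rate and flag the pipeline for retraining when the rolling rate over recent runs falls more than a set tolerance below the rate measured at onboarding.

```python
from collections import deque


class RetrainMonitor:
    """Hypothetical drift monitor for one reconciliation pipeline.

    Flags retraining when the rolling auto-match rate over the last `window`
    runs drops more than `tolerance` below `baseline_rate`.
    """

    def __init__(self, baseline_rate: float, window: int = 5, tolerance: float = 0.05):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        # deque(maxlen=...) silently discards the oldest rate once full.
        self.history: deque[float] = deque(maxlen=window)

    def record_run(self, matched: int, total: int) -> bool:
        """Log one inference run; return True if retraining should be triggered."""
        rate = matched / total if total else 0.0
        self.history.append(rate)
        full = len(self.history) == self.history.maxlen  # wait for a full window
        rolling = sum(self.history) / len(self.history)
        return full and rolling < self.baseline_rate - self.tolerance
```

For example, with a baseline of 0.90, a window of 3 runs, and a tolerance of 0.05, runs matching 90, 88, and 87 of 100 records do not trigger retraining, but a following run at 70 of 100 pulls the rolling rate to about 0.82 and does. Waiting for a full window avoids retraining on a single noisy run.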

The Result

- Scalable onboarding of up to 40,000 reconciliation pipelines, with onboarding time reduced from four weeks to two hours (a 99.7% reduction).

- Coverage per reconciliation increased by up to 250%, reducing manual touchpoints and increasing operational efficiency.

- Inference time reduced by 99.5%, from four hours to 1.2 minutes, enabling rapid decision-making.

- A parallelized, continuous monitoring and retraining architecture supports long-term scalability and adaptability with negligible overhead.
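The percentage reductions quoted above can be checked directly. The onboarding figure holds if "four weeks" means elapsed calendar time (4 × 7 × 24 = 672 hours); counted in business hours, the reduction would come out somewhat lower.

$$
\begin{aligned}
\text{Onboarding: } & 1 - \frac{2\ \text{h}}{4 \times 7 \times 24\ \text{h}} = 1 - \frac{2}{672} \approx 0.997 \quad (99.7\%) \\
\text{Inference: } & 1 - \frac{1.2\ \text{min}}{4 \times 60\ \text{min}} = 1 - \frac{1.2}{240} = 0.995 \quad (99.5\%)
\end{aligned}
$$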